Stable Diffusion playground

Launch an interactive web app for Stable Diffusion

This example deploys a simple web app for Stable Diffusion. You will learn how to set up an interactive workload for inference: mounting models from Hugging Face and opening a port for user inputs. For a more in-depth guide, refer to our blog post.

Try it on VESSL Hub

Try out the Quickstart example with a single click on VESSL Hub.

See the final code

See the completed YAML file and final code for this example.

What you will do

- Host a GPU-accelerated web app built with Streamlit

- Mount model checkpoints from Hugging Face

- Open up a port to an interactive workload for inference

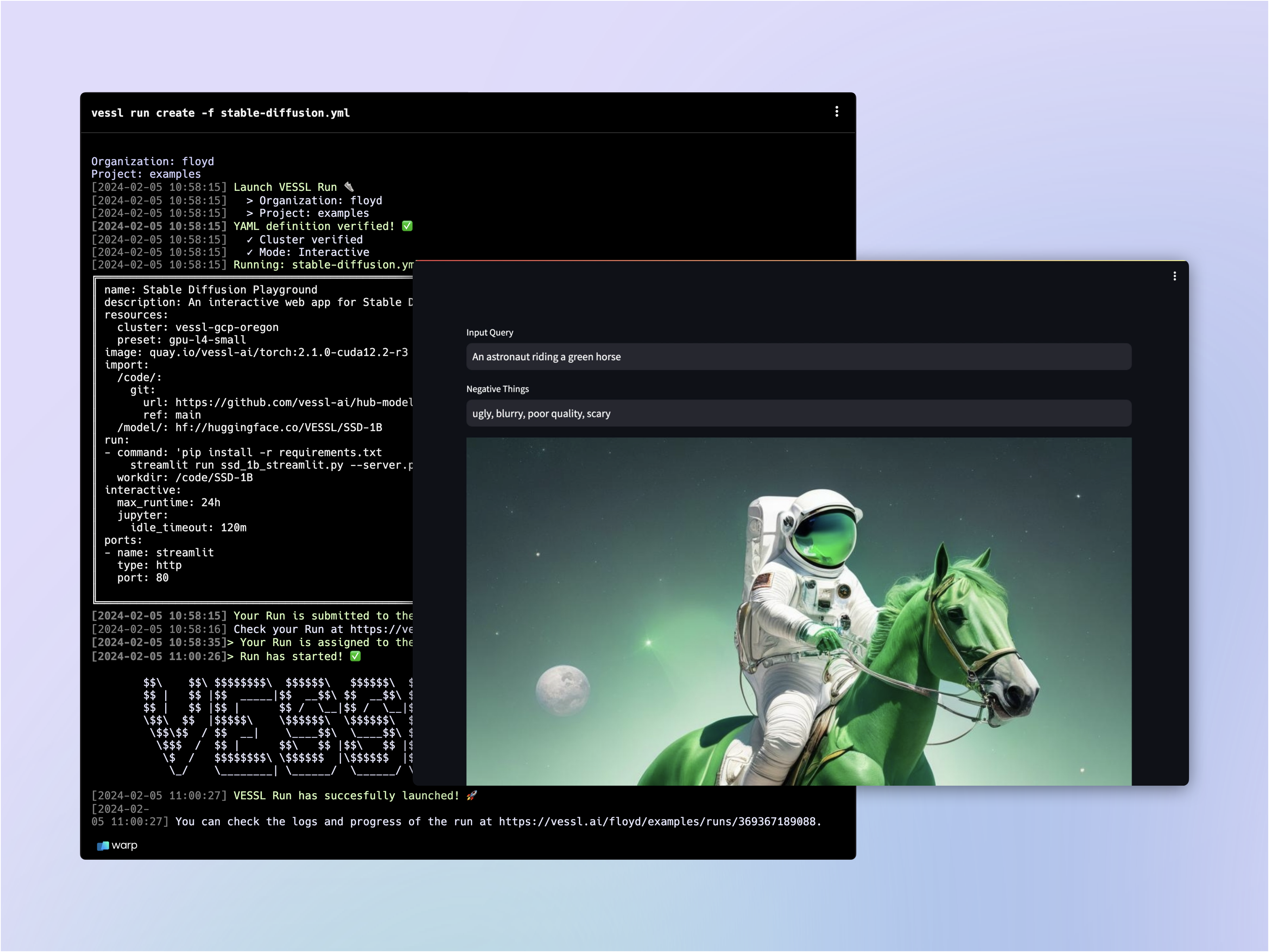

Writing the YAML

Let’s fill in the stable-diffusion.yaml file.

Spin up an interactive workload

We already learned how you can launch an interactive workload in our previous guide. Let’s copy & paste the YAML we wrote for notebook.yaml.
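As a reminder, the starting point might look roughly like the sketch below. The key names follow VESSL's YAML schema as we recall it. The run name, cluster, preset, image, and timeout values are assumptions or placeholders, not values taken from notebook.yaml, so substitute your own and check them against the YAML reference.

```yaml
# stable-diffusion.yaml (sketch of the interactive-run starting point)
name: stable-diffusion          # assumed run name
resources:
  cluster: <your-cluster>       # placeholder: a cluster available to your organization
  preset: <your-gpu-preset>     # placeholder: a GPU preset on that cluster
image: <your-container-image>   # placeholder: a container image with PyTorch and CUDA
interactive:
  max_runtime: 24h              # assumed value: upper bound on how long the run stays up
  jupyter:
    idle_timeout: 120m          # assumed value: idle time before the notebook shuts down
```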

Configure an interactive run

Let’s mount a GitHub repo and import a model checkpoint from Hugging Face. We already learned how to mount a codebase in our Quickstart guide.

VESSL comes with a native integration with Hugging Face so you can import models and datasets simply by referencing the link to the Hugging Face repository. Under import, let’s create a working directory /model/ and import the model.
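A minimal sketch of what the import section could look like is shown below. Only the import key and the /model/ directory come from this guide. The /code/ mount path, the nested git keys, the hf:// link format, and both repository names are assumptions or placeholders, so verify them against the YAML reference before use.

```yaml
import:
  # Assumed mount path for the codebase; the repository is a placeholder.
  /code/:
    git:
      url: https://github.com/<your-org>/<your-repo>
      ref: main
  # Working directory for the model checkpoint, referenced by its
  # Hugging Face repository link (link format assumed, repository is a placeholder).
  /model/: hf://huggingface.co/<hf-org>/<hf-model>
```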

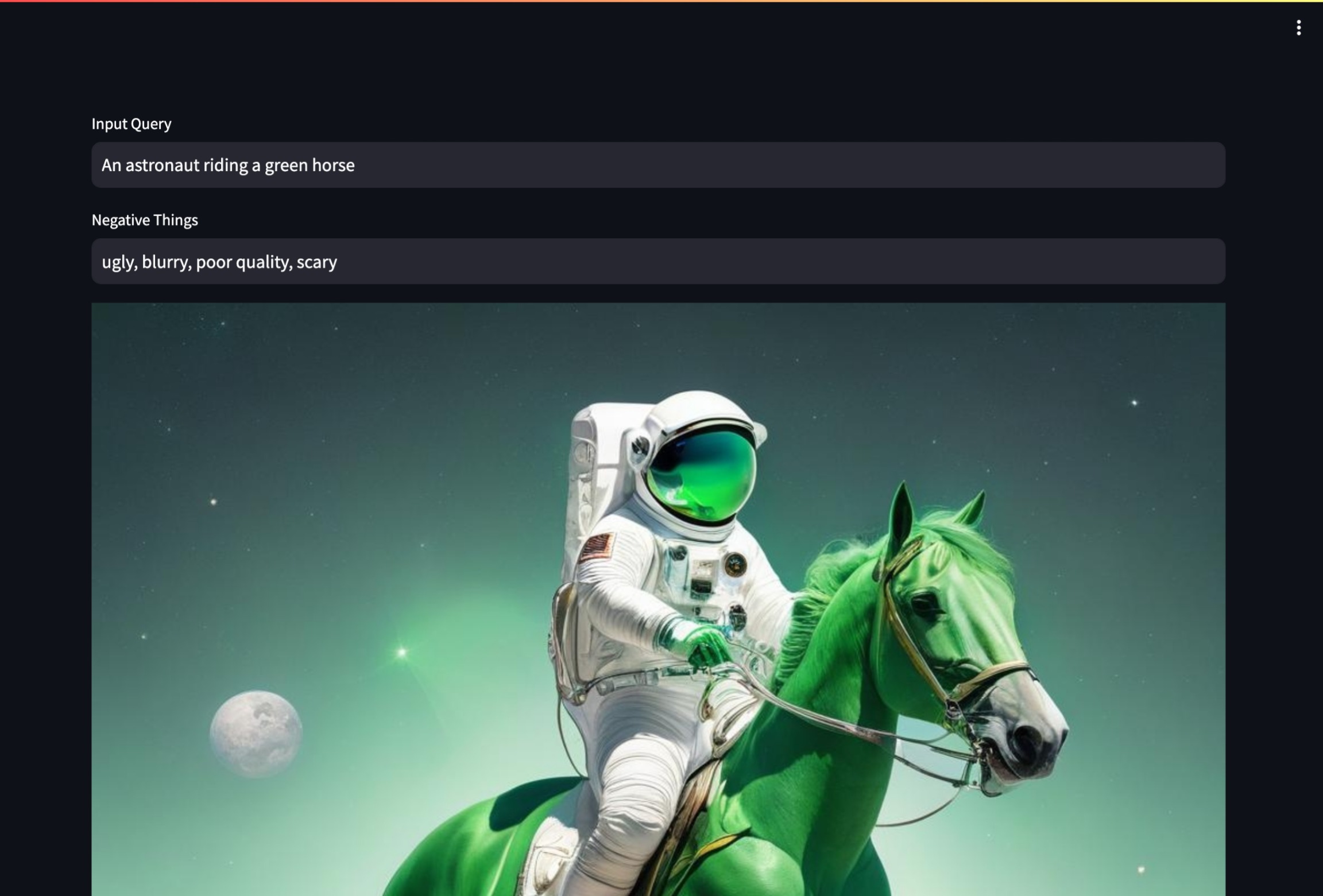

Open up a port for inference

The ports key exposes the workload ports where VESSL listens for HTTP requests. This means you will be able to interact with the remote workload, sending an input query and receiving a generated image, through port 80 in this case.
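In YAML, this could be sketched as follows. The ports key, the HTTP protocol, and port 80 come from this guide. The name and type field names are our assumption about the schema, and the name streamlit is chosen to match the link that appears under ENDPOINTS.

```yaml
ports:
  - name: streamlit   # assumed field: label shown for the endpoint
    type: http        # assumed field: protocol VESSL listens for
    port: 80          # the port the Streamlit app will serve on
```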

Write the run commands

Let’s install additional Python dependencies with requirements.txt and finally run our app ssd_1b_streamlit.py.

Here, the --server.port=80 flag tells our Streamlit app to serve on the port we opened previously. Through that port, the app receives a user input and generates an image with the Hugging Face model we mounted on /model/.
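The run section might then look like the sketch below. The two commands come from this guide. The command and workdir field names and the /code/ path are assumptions, so point workdir at whichever directory of your mounted repository holds requirements.txt and ssd_1b_streamlit.py.

```yaml
run:
  - command: |-
      # Install the app's extra Python dependencies.
      pip install -r requirements.txt
      # Start the Streamlit app on the port exposed under ports.
      streamlit run ssd_1b_streamlit.py --server.port=80
    workdir: /code/   # assumed: directory where the repository was mounted
```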

Running the app

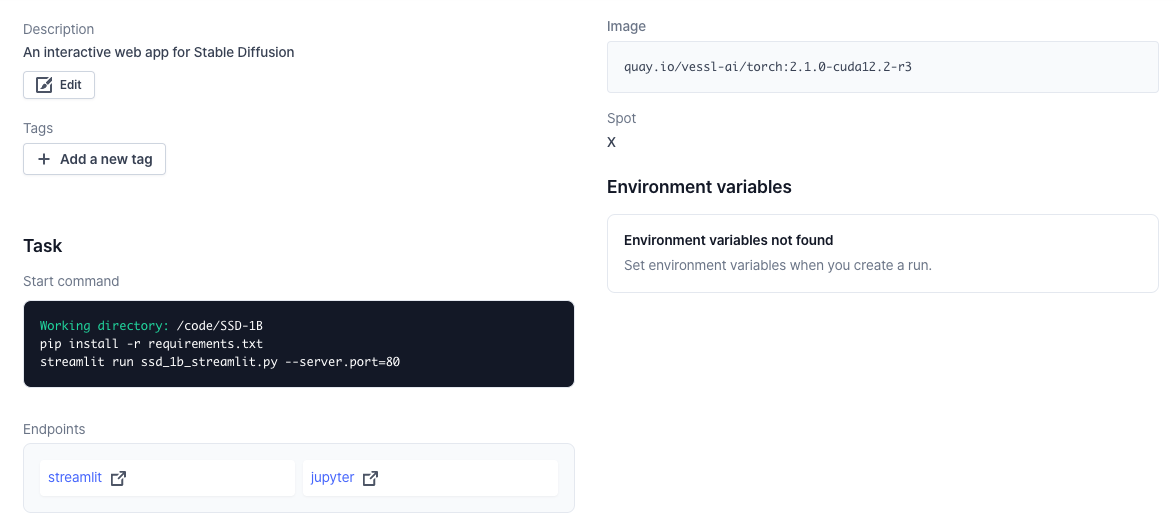

Once again, running the workload will take you to the workload Summary page.

Under ENDPOINTS, click the streamlit link to launch the app.

Using our web interface

You can repeat the same process on the web. Head over to your Organization, select a project, and create a New run.

What’s next?

See how VESSL takes care of the infrastructural challenges of fine-tuning a large language model with a custom dataset.

Llama 3.2 Fine-tuning

Fine-tune Llama 3.2-3B with instruction datasets

Llama 3.1 deployment

Serve & deploy vLLM-accelerated Llama 3.1-8B

Enable Serverless Mode

Deploy Serverless mode using Text Generation Inference (TGI)